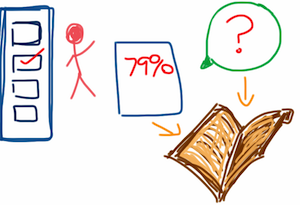

For an ePortfolio-based program, what value is there in multiple choice quizzes, and in reflecting on them?

For me, one of the things that is really exciting about the potential of the current curriculum development and reform of general education at UWO is the notion of a student collecting stuff throughout the duration of their studies, reflecting on it as they go, and then building on that experience and content as part of the capstone element of their major. There are lots of issues still to be explored and developed into practice in the initiative, but one which we’re beginning to work through is how Multiple Choice Quizzes (MCQ) fit in the context of program ePortfolios.

This post is an effort to gather my thoughts before wider conversations with colleagues. I'm fortunate to work with a number of experienced colleagues who specialize in instructional design, assessment, and pedagogy, so this isn't so much a case of "I need to figure this out" as an opportunity to puzzle through some of my own questions before those conversations. As ever, the thoughts in this blog represent only my own opinions and half-formed thought processes.

The challenge is that some courses, for any number of reasons, choose to assess their students' work using MCQs. The course might have very high enrollment, it might be very content-focused, or any number of other factors might favor MCQ assessment. In this context:

- What is a meaningful artifact/output from that course for the ePortfolio?

- What is a meaningful reflection on it?

Here, "meaningful" means roughly: useful and appropriate for the student, the instructor, and the future major (whatever the discipline).

MCQ as such

I'll admit that I have a very mixed relationship with MCQs, perhaps because I was scarred by an Introduction to the Old Testament MCQ exam that used negative marking to discourage guessing. After years of physics- and chemistry-related MCQ exams in high school, the leap to not making a best guess was painful enough that MCQs still occupy an odd place in my psyche [It was a better pedagogical approach, but still… ].

More seriously, though, a lot of my thinking around course (re)design has favoured pedagogical approaches that focus on small groups and don't particularly favour MCQs. To get my brain thinking more usefully, I went looking for what had been written about this, and in particular at David Nicol's "E-assessment by design: using multiple-choice tests to good effect", Journal of Further and Higher Education, vol. 31, Feb 2007, pp. 53-64, available on the REAP site or via Taylor & Francis.

Nicol's paper addresses a number of issues and offers case studies, but at its heart it proposes a way of applying to MCQs the seven principles of good feedback from Nicol and Macfarlane-Dick's 2006 paper "Formative assessment and self-regulated learning: a model and seven principles of good feedback practice" (Studies in Higher Education 31 (2), 198-218). The suggestions are well thought through, but because they largely focus on developing the role of MCQs in formative assessment as part of a course redesign, on this occasion the paper's pattern of thought and process was perhaps more directly useful to me than its specific recommendations.

As summarized in Nicol (2007), the seven principles of good feedback practice are:

- “helps clarify what good performance is (goals, criteria, standards);

- facilitates the development of self-assessment and reflection in learning;

- delivers high-quality information to students about their learning;

- encourages teacher and peer dialogue around learning;

- encourages positive motivational beliefs and self esteem;

- provides opportunities to close the gap between current and desired performance;

- provides information to teachers that can be used to help shape teaching.”

Although courses might develop over time to use MCQs in the ways Nicol outlines, in the short term the underlying principles of good feedback practice may help inform which artifacts will be meaningful. They also raise other questions that may point to what a meaningful artifact would be, such as: What does this course do? How does it see itself in relation to other courses in the program? What does success in this course demonstrate? And how can its learning objectives and feedback structure be captured?

Artifacts and Reflections

In the context of a wider program of study, ePortfolio artifacts from a particular course serve a variety of purposes [my current impression/understanding of the program's intent, or last best guess]. They provide basic content for the program framework, filling out the outline of a student's progress through the program and providing fodder for its reflective components. The process of curating artifacts (granular stuff, assignments, and reflections) may help students think about their progress and about how they learn. They also provide assessable items with a declared alignment to institutional, program, and course learning outcomes.

So can a course with 100% MCQ assessment provide a meaningful ePortfolio artifact?

My initial thought was that the reflection piece would obviously be able to do that. However, this raises a couple of problems: what would the student reflect on, and how could the reflection be meaningful without aggravating the very problem MCQ assessment is trying to address? I suspect MCQs are, at least in part, a response to class size and instructor workload, so an approach that means instructors have to mark 300 more essays isn't really helpful.

It is tempting to suggest that reflecting on the process of studying for and taking the MCQ would be useful, but as a colleague noted, that may be irrelevant to the discipline and would be hard to make meaningful. What, then, might be of disciplinary relevance? Perhaps it comes back to two questions: 1) How does the course see itself aligning with the University Learning Outcomes (which are discipline-independent)? and 2) What role do the course and its assessment play in the program, both in terms of content and in terms of aligning with the course's own learning outcomes?

One option might be to structure the MCQ and its feedback so that students receive standardized information about the types and topics of questions they struggled with, and then reflect on that. But (aside from the work potentially involved in setting that up) I'm not sure how that ties into the bigger picture of either question 1 or question 2.

Departments may already have grappled with these alignments (1 and 2) to some degree and considered the role a given course plays in a program, so it may be useful to look to those discussions for ideas. They may not present immediate or obvious answers, but they could offer a key starting point.

In that context, I'm wondering whether a useful tack (one that might be both meaningful and low-overhead) could be to have students provide some form of self-assessment: a form response to a rubric of some kind, drawing on issues raised in those alignment discussions. For example: What has been good in the course? What has been troublesome? How do you think your MCQ exam went? And something about disciplinarity: What has this course taught me about being a ____?

What do you think? What ePortfolio artifacts of longer term use might emerge from a large cohort MCQ assessed course? What role do large scale MCQ assessed courses play in a student’s degree process (and what from that is useful to capture)?